In the realm of search engine optimization (SEO), it is essential to have a good understanding of technical SEO to achieve high rankings. Using powerful tools such as Semrush can simplify this process and save a lot of time and effort.

This guide will provide you with a detailed explanation of how to perform a technical SEO audit using Semrush to improve your website’s performance.

You will gain a better understanding of Semrush’s SEO dashboard, learn how to get the most out of the site audit tool, and gain valuable insights on how to address major site issues to make your website understandable for search engines like Google.

Before we jump into Semrush, let’s talk about technical SEO and why it’s important. It directly affects your website’s visibility, and I’ll break down why.

What is Technical SEO?

Technical SEO is the process of optimizing a website so that it meets search engine requirements, with the goal of improving rankings. It includes elements such as crawlability, indexation, page speed, and more.

Why is Technical SEO Important?

Technical SEO is important as it has a direct impact on the visibility of your website. When the search engine is unable to access your site and understand the web pages, the site will not appear in the search results.

Ensuring that your website is easily accessible and understandable to search engines is vital for presenting your content to your target audience.

Now, let’s take a quick look at Semrush, the comprehensive digital marketing tool we’ll be using today, and learn about some of its features.

What is Semrush?

Semrush is a powerful digital marketing platform with 55+ tools that can be used for keyword research, competitive analysis, content marketing, and more.

Semrush features

The digital marketing platform offers a wide range of features to help you improve your technical SEO in particular and your online visibility in general. These features include:

- Keyword Research: Semrush provides a detailed keyword analysis, including search volume, keyword difficulty, and related keywords.

- Competitive Analysis: With Semrush, you can compare your website’s performance to your competitors and identify opportunities for improvement.

- Backlink Analysis: Semrush enables you to analyze backlinks to your site and monitor link-building strategies.

- Site Audit: This feature helps you identify and fix any technical and on-page issues on your website that could affect its SEO performance.

- Content Marketing: Semrush offers various content planning, creation, and optimization tools to help you improve your overall content strategy.

- On-Page SEO: Semrush’s on-page SEO tool provides actionable recommendations to optimize individual web pages for search engines.

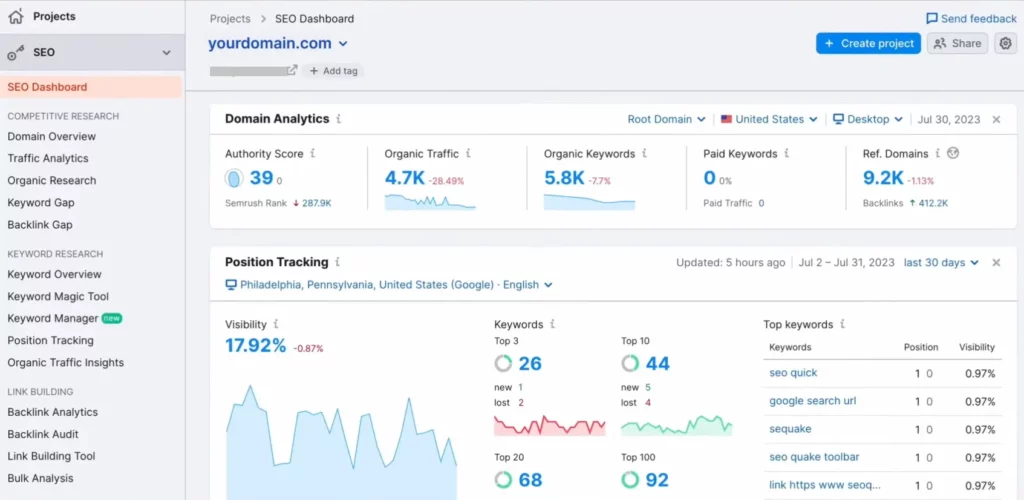

Semrush’s SEO Dashboard

Semrush’s SEO Dashboard gives you a quick overview of your website’s or your competitor’s performance, showcasing key metrics like Top Organic Keywords, Visibility, Organic Traffic, Authority Score, Backlink Analytics, and Position Tracking.

To access reports, you will need at least one project (website).

As you can see, Semrush’s SEO dashboard is divided into widgets. At the top is the Domain Analytics widget, which shows how these metrics have performed compared to the previous 30 days.

The position tracking widget monitors the search rankings of your pages, subfolders, or domains across keywords and locations. It allows you to check your overall visibility and analyze your top-performing keywords.

Site Audit and On-Page SEO Checker are a pair of technical SEO tools designed to check your site’s health and offer recommendations for optimizing your site and current content.

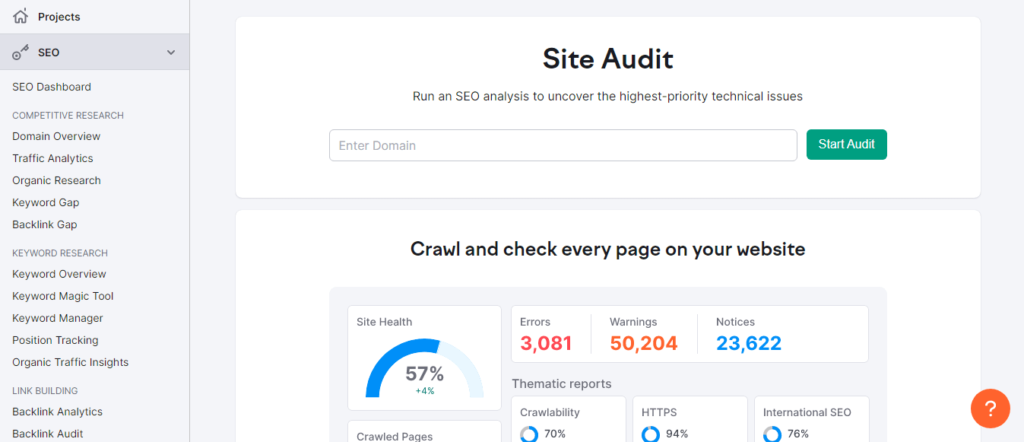

The Site Audit Tool

The site audit tool is a website crawler that checks how healthy your site is in terms of technical and on-page SEO. It analyzes your site and looks at known factors that affect how well your site shows up in searches.

It provides you with a comprehensive list of technical SEO and on-page issues, making it easy to identify a website’s struggles.

The site audit tool runs over 140 technical and on-page SEO checks, which include crawlability, broken links, and duplicate content. You can start website crawling immediately or schedule automatic re-crawls on a daily or weekly basis.

How to perform a technical SEO audit?

To get started with Semrush’s site audit tool, you need to create a project for your domain. If you don’t have a Semrush account yet, sign up first, then set up a project.

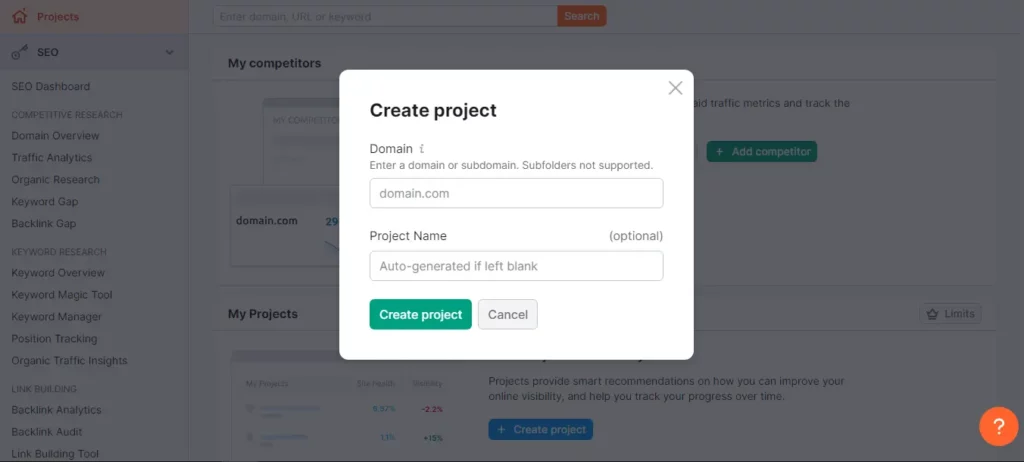

Navigate to projects and create a new project.

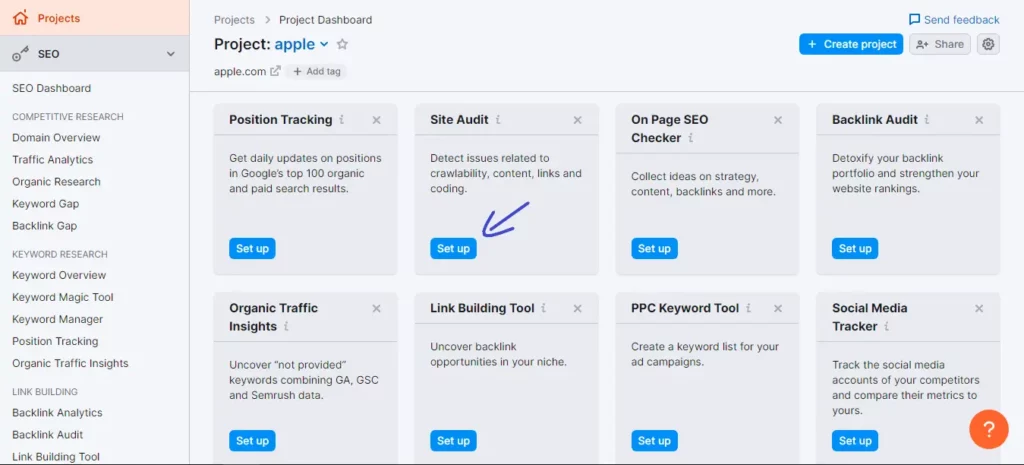

Enter your domain and give it a name, then click “Create Project.” Next, select “Set up” in the Site Audit block.

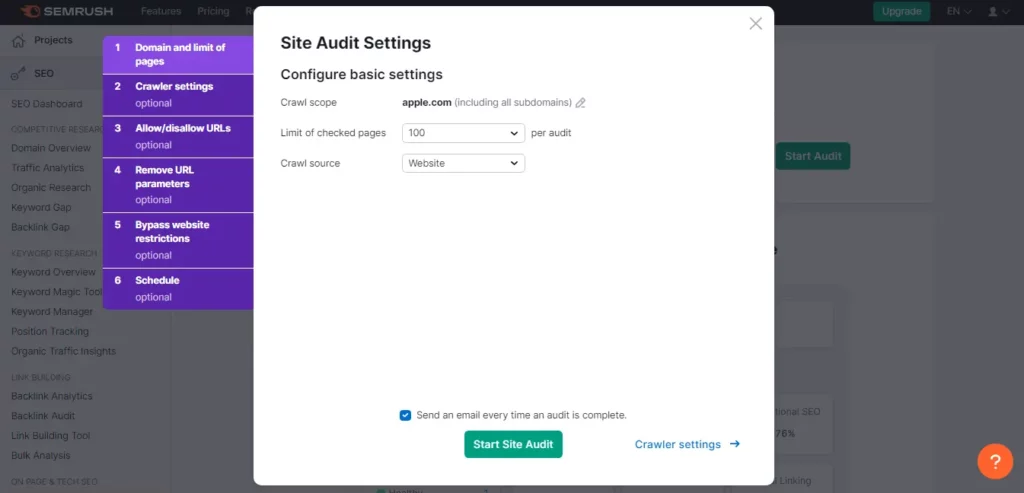

After that, you will have to configure your settings. This involves selecting the crawl scope, the limit of checked pages, and the crawl source.

The limit of checked pages depends on your Semrush account level. Each level has a specific limit on the number of pages you can check during an audit.

- Free users: can crawl up to 100 pages per month.

- Pro users: up to 20,000 pages per audit.

- Guru users: up to 20,000 pages per audit.

- Business users: up to 100,000 pages per audit.

For the crawl source, you can choose between four options that determine how the Semrush bot will crawl your site:

- Website: This setting prompts the bot to crawl your website like GoogleBot. It will explore your site by following the links it discovers in your page’s code.

- Sitemap on-site: Choosing this will crawl only the pages listed in the sitemap referenced in your robots.txt file.

- Sitemap by URL: Similar to “Sitemap on-site,” but this option lets you specify the URL of your sitemap yourself.

- File of URLs: With this choice, you can specify a group of pages on your website. It’s important to format your file correctly, with one URL per line, and upload it to the Semrush interface as a .csv or .txt file.
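As a rough illustration of the “File of URLs” format, here is a minimal Python sketch that turns a list of pages into a one-URL-per-line text body. The example URLs and the `build_url_file_text` helper are placeholders, not part of Semrush.

```python
def build_url_file_text(urls):
    """Return a text body with one unique, non-empty URL per line."""
    seen = set()
    lines = []
    for url in urls:
        cleaned = url.strip()  # drop stray spaces and line breaks
        if cleaned and cleaned not in seen:
            seen.add(cleaned)
            lines.append(cleaned)
    return "\n".join(lines) + "\n"


# Hypothetical pages to audit
pages = [
    "https://example.com/",
    "https://example.com/blog/ ",
    "https://example.com/",  # duplicate, dropped
]

body = build_url_file_text(pages)
print(body, end="")
```

Saving `body` to a .txt file gives you something you can upload in the crawl source settings.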

The site audit tool also has various advanced options that you can adjust to get more precise results. Once you have finished configuring the options, click on the “Start Site Audit” button to begin the auditing process. Please note that this may take a few minutes.

Site Audit Overview Report

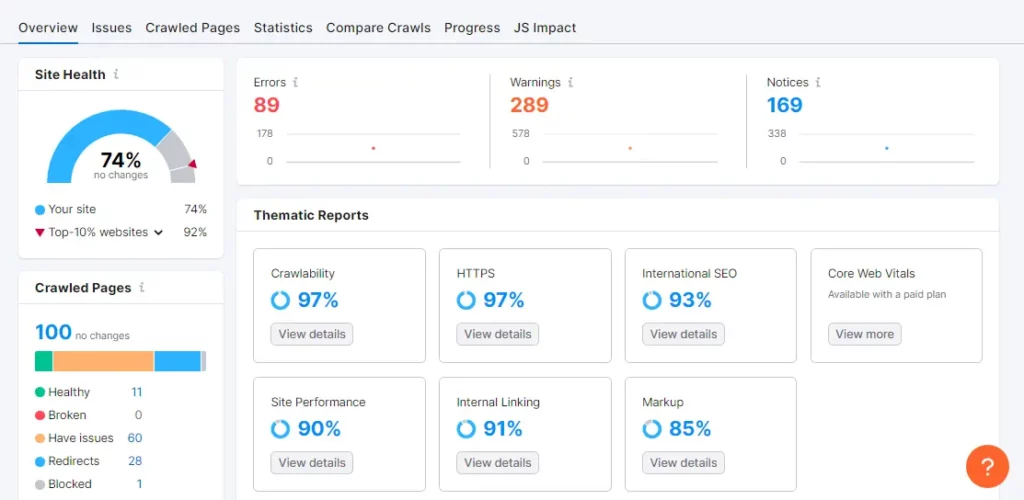

The Site Audit tool’s Overview Report provides a Site Health Score, indicating the overall health of your website based on technical SEO checks. It is represented as a percentage, with a higher percentage indicating a healthier site.

The report also displays the cumulative count of Errors, Warnings, and Notices. Errors are critical issues highlighted in red, warnings indicate moderate severity in orange, and notices provide less severe information in blue.

These notices offer helpful information for addressing site problems but have minimal impact on the overall site health score.

The Site Audit tool’s Thematic Reports cover various aspects of website optimization. These include crawlability, HTTPS implementation, international SEO, performance, internal linking, Core Web Vitals (CWV), and markup.

The reports offer insights into specific issues such as page load speed, code minification, internal linking effectiveness, user experience factors like LCP, TBT, and CLS, as well as the implementation of structured data items.

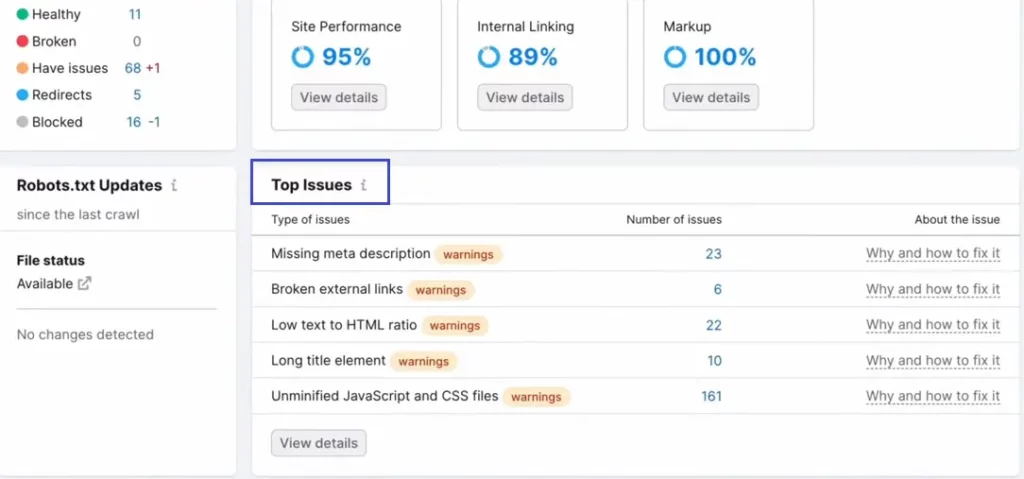

Top Issues

The overview report provides a clear picture of the site’s major issues and directs you to the Top Issues widget for actionable instructions on addressing these issues.

These issues are prioritized based on severity and the number of affected pages, allowing users to click on specific problems for more information.
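To make the idea of prioritizing concrete, here is a small Python sketch that sorts issues by severity first and by affected pages second. It is only an illustration of the principle, not Semrush’s actual ranking logic, and the issue names, severities, and page counts are made up.

```python
# Lower rank means more urgent
SEVERITY_RANK = {"error": 0, "warning": 1, "notice": 2}


def prioritize(issues):
    """Sort by severity, then by number of affected pages (largest first)."""
    return sorted(
        issues,
        key=lambda issue: (SEVERITY_RANK[issue["severity"]], -issue["pages"]),
    )


# Hypothetical audit results
issues = [
    {"name": "Missing meta descriptions", "severity": "warning", "pages": 40},
    {"name": "Broken internal links", "severity": "error", "pages": 12},
    {"name": "Long URLs", "severity": "notice", "pages": 90},
    {"name": "Duplicate content", "severity": "error", "pages": 30},
]

for issue in prioritize(issues):
    print(issue["severity"], issue["pages"], issue["name"])
```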

By reviewing the comprehensive list of issues in the Issues tab, you can access instructions on how to address each problem, such as fixing broken links, resolving thin content, and adding missing H1s or meta descriptions.

Upon addressing these issues, you can rerun the Site Audit to verify if the problems were resolved effectively, thereby optimizing the site for improved SEO and user experience performance.

The Semrush Site Audit tool offers users more than 140 checks, which help identify technical and on-page issues on their website, and also provide opportunities for improvement.

Additionally, you can opt to receive recurring email notifications once the audit is complete, so you can stay updated on your website’s progress.

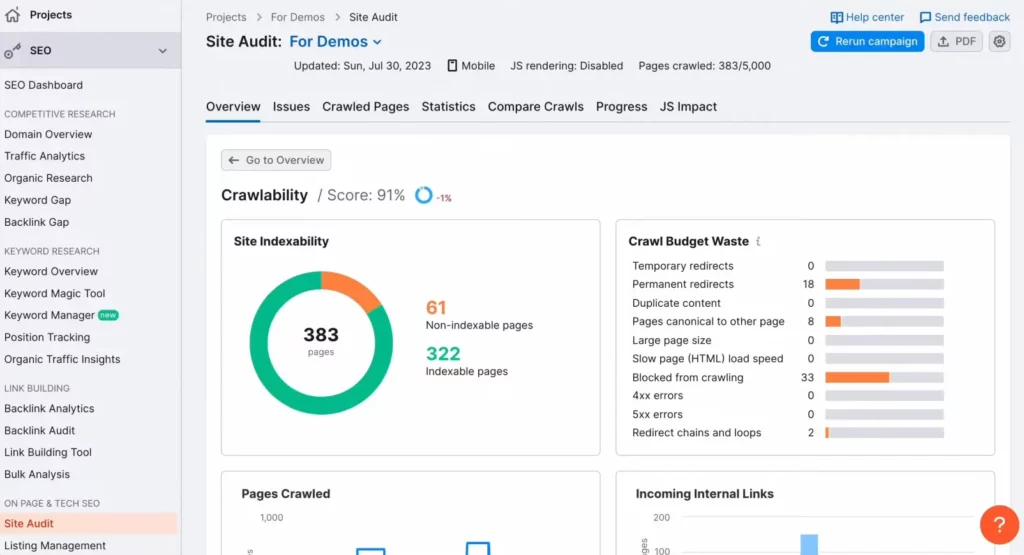

Crawlability Issues

Crawlability means the ability of search engine crawlers or bots to access web pages.

When your website has crawlability issues, search engines like Google can’t access all of its content, for example because of broken links or dead ends. This can prevent the website from being indexed properly, which may negatively affect its visibility on search engines.

The Crawlability report gives you a complete analysis of your website’s elements that affect how search engines crawl your site.

This page has graphs and sections such as site indexability, pages crawled, crawl budget waste, and more. By clicking on a graph, you can see a filtered Crawled Pages report that shows you the specific pages where the issues were found.

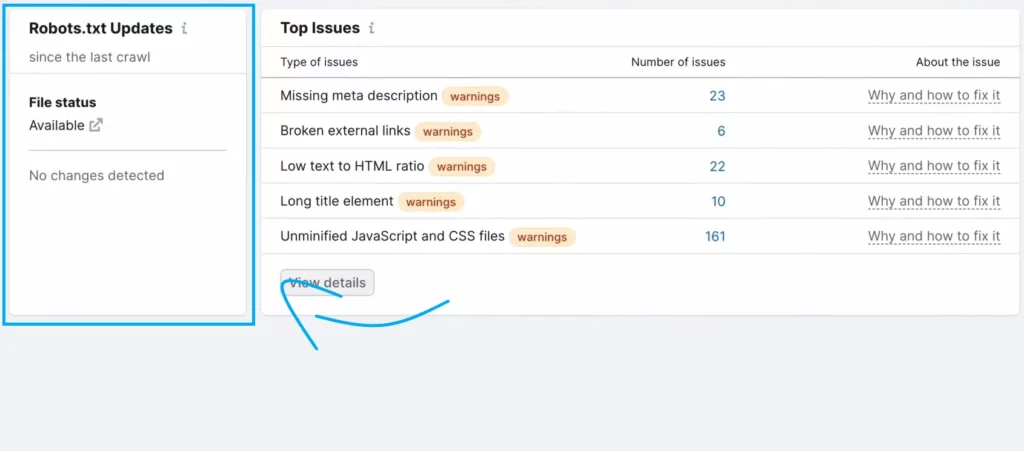

Robots.txt Issues

Robots.txt is a plain text file that instructs search engine bots on which URLs to crawl and which URLs to avoid. It helps to prevent overloading your website, keep search engine bots away from private folders, and define the sitemap location.

Checking the Robots.txt file is essential to a technical SEO audit and requires technical knowledge. Each line of code in this file can block search engine bots from accessing a webpage on your site.
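To see how a single line can block a whole folder, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The robots.txt content and the example.com URLs are hypothetical, and Python’s parser may not match Google’s rule handling in every edge case.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# One Disallow line hides everything under /private/ from compliant bots
private_ok = parser.can_fetch("Googlebot", "https://example.com/private/report.html")
blog_ok = parser.can_fetch("Googlebot", "https://example.com/blog/")

# site_maps() needs Python 3.8+; it returns None if no Sitemap line exists
sitemaps = parser.site_maps()

print("private allowed:", private_ok)
print("blog allowed:", blog_ok)
print("sitemaps:", sitemaps)
```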

To check your website’s robots.txt file, navigate to the site audit report overview and scroll down to find “Robots.txt Updates.”

Here, you can check whether the crawler has detected the robots.txt file on your website. If the file status is “Available,” you can review your robots.txt file by clicking the link icon next to it.

You also have the option to focus solely on the robots.txt file changes since the last crawl by clicking the “View changes” button.

In addition, to find further issues related to robots.txt, you can open the “Issues” tab in the Site Audit tool and search for “robots.txt.”

Some common issues that may appear include format errors in the robots.txt file, failure to indicate the sitemap.xml in robots.txt, and blocked internal resources.

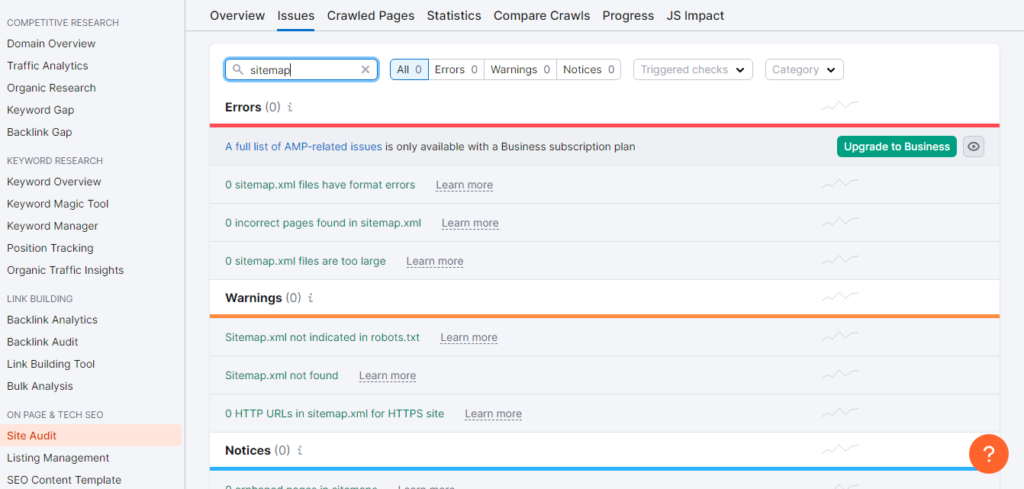

XML Sitemap Issues

An XML sitemap is a file that lists the important pages of your website that you want search engines such as Google to index. This helps search engines locate and crawl those pages effectively.

To find sitemap issues, simply go to the “Issues” tab and search for “sitemap” in the search field.

It is important to review your XML sitemap in every technical SEO audit to ensure that it contains the pages you want to rank and excludes pages like login and customer account pages.

Make sure that the sitemap does not include gated content. It is important to check if your sitemap works correctly.

Look for issues such as incorrect pages or format errors and use the Site Audit tool to detect any sitemap-related problems.
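If you want to spot-check a sitemap by hand, the following Python sketch parses a sitemap and flags pages that probably should not be listed. The sample sitemap and the `/login` and `/account` path markers are assumptions for illustration, so adjust them to your own site.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace defined by the sitemaps.org protocol
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content
SAMPLE_SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/technical-seo/</loc></url>
  <url><loc>https://example.com/login</loc></url>
</urlset>
"""


def sitemap_urls(xml_text):
    """Return every <loc> value found in a urlset sitemap."""
    root = ET.fromstring(xml_text)
    locs = root.findall("sm:url/sm:loc", SITEMAP_NS)
    return [loc.text.strip() for loc in locs if loc.text]


def unwanted_urls(urls, path_markers=("/login", "/account")):
    """Return URLs whose path starts with one of the unwanted markers."""
    return [
        url for url in urls
        if any(urlparse(url).path.startswith(m) for m in path_markers)
    ]


urls = sitemap_urls(SAMPLE_SITEMAP)
print(unwanted_urls(urls))
```

A malformed file makes `ET.fromstring` raise a `ParseError`, which is a quick way to catch format errors.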

Site Architecture

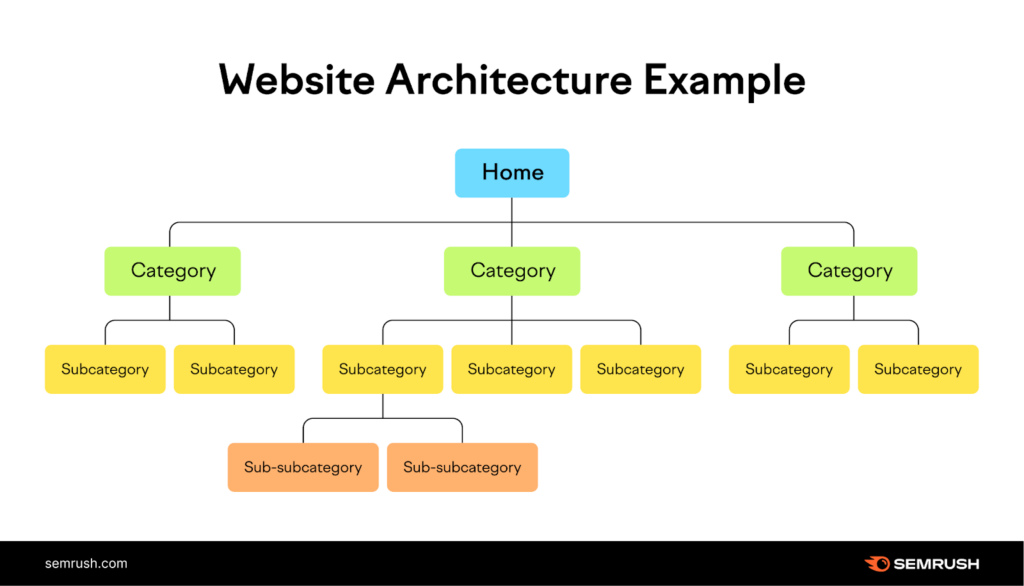

Site architecture refers to the hierarchical structure of pages on a website. It’s how your website pages are structured and connected.

This structure helps both users and search engine crawlers find the information they are looking for on your website.

Ideal site architecture is crucial for two main reasons: firstly, it lets search engines efficiently crawl and comprehend the relationships between your website’s pages; and secondly, it enhances user experience by making it easier for visitors to navigate your site.

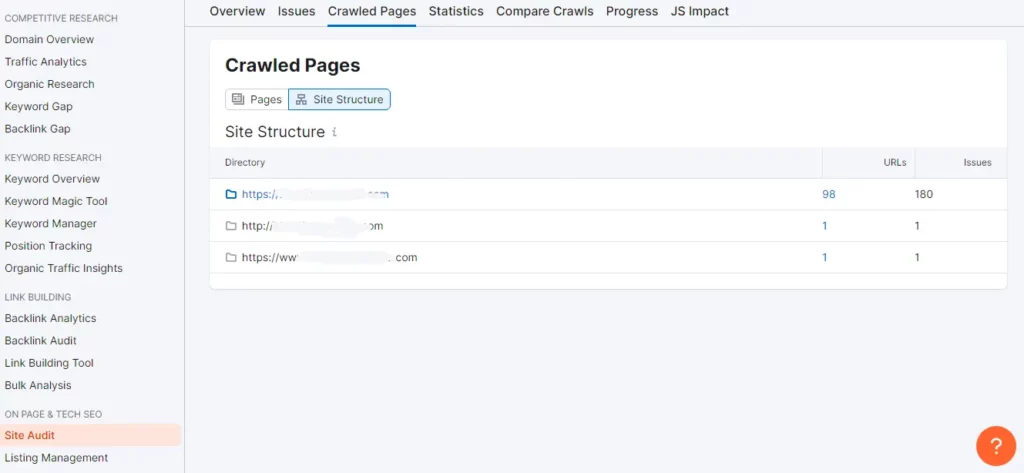

To view your site’s hierarchy, go to the “Site Audit Overview” report, click on “Crawled Pages,” and switch to “Site Structure.”

💡 An ideal site structure allows users to find the desired page within three clicks from the homepage. If it takes more clicks, the site’s hierarchy is too deep, and search engines may consider deeper pages as less relevant or important.

To find pages that are buried too deep, navigate to the “Crawled Pages” tab and switch to the “Pages” view. Click on “More filters” and set “Crawl Depth” to “4+ clicks.” To resolve this issue, add internal links to the pages that are too deeply embedded in the site’s structure.
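Crawl depth is simply the smallest number of clicks needed to reach a page from the homepage. Here is a minimal Python sketch that computes it with a breadth-first search, assuming you already have a map of which page links to which. The `SITE_LINKS` data is made up.

```python
from collections import deque


def click_depths(links, start):
    """Return the fewest clicks needed to reach each page from `start`."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal links: page -> pages it links to
SITE_LINKS = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/blog/seo/"],
    "/blog/seo/": ["/blog/seo/audits/"],
    "/blog/seo/audits/": ["/blog/seo/audits/checklist/"],
}

depths = click_depths(SITE_LINKS, "/")
too_deep = sorted(page for page, depth in depths.items() if depth >= 4)
print(too_deep)
```

Adding a link from `/blog/` straight to the checklist page would bring it down to two clicks.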

Alongside site structure, site architecture has two other key aspects: navigation and URL structure.

Navigation

Your website’s navigation, including menus, footer links, and breadcrumbs, should make it easy for users to find their way around.

Navigation is a crucial aspect of creating a well-structured site. Keep the navigation simple and avoid using complex mega menus.

The navigation must reflect the hierarchy of your pages, and utilizing breadcrumbs is a great way to achieve this.

URL structure

Your website’s URL structure should be consistent and user-friendly. Ensure that your URL slugs are user-friendly and adhere to best practices.

Conducting a site audit can help identify common URL issues, such as the use of underscores, excessive parameters, and overly long URLs.
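For a quick manual check of these URL issues, here is a small Python sketch. The thresholds (`max_length=200`, `max_params=1`) are assumptions chosen for the example, not confirmed Semrush values.

```python
from urllib.parse import parse_qsl, urlsplit


def url_issues(url, max_length=200, max_params=1):
    """Return a list of common URL structure problems found in `url`."""
    parts = urlsplit(url)
    issues = []
    if "_" in parts.path:
        issues.append("underscore in path")
    params = parse_qsl(parts.query, keep_blank_values=True)
    if len(params) > max_params:
        issues.append("too many parameters")
    if len(url) > max_length:
        issues.append("URL too long")
    return issues


# Hypothetical URLs
print(url_issues("https://example.com/blog/technical-seo/"))
print(url_issues("https://example.com/my_page?a=1&b=2"))
```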

Internal Linking Issues

Internal links are the links that point from one page to another on the same website. They are an important part of website architecture because they distribute the authority across your pages to help search engines identify important pages.

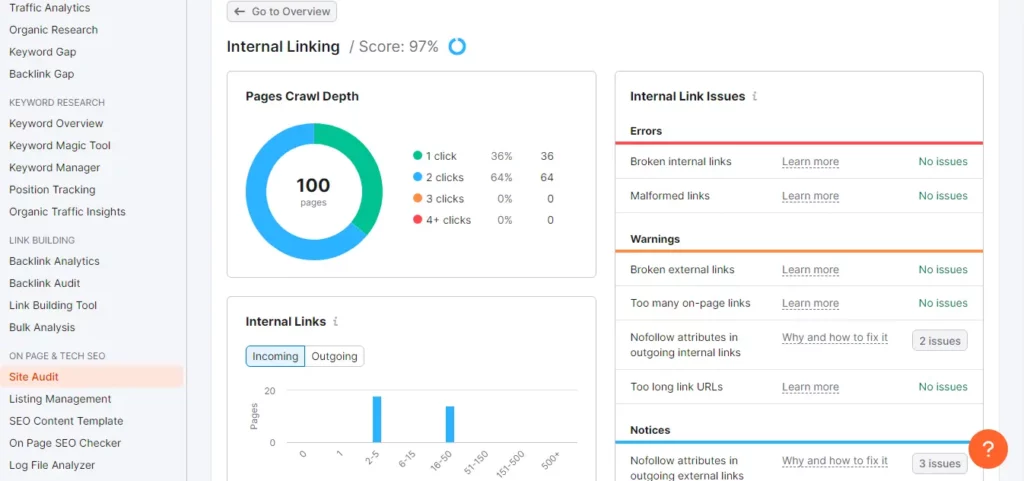

To check your internal linking, select “View details” under the “Internal Linking” score in the audit overview report.

This report provides insights into internal link issues on the website.

It highlights common problems like broken internal links and orphaned pages. It’s a simple process to fix broken links and orphaned pages by manually updating the broken links and adding at least one internal link to the orphaned pages.
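The logic behind both checks is simple set arithmetic. Here is a minimal Python sketch, assuming you have a list of existing pages and a map of internal links (both made up here). An orphaned page is one that exists but is never linked to, and a broken link points to a page that does not exist.

```python
def internal_link_report(links, known_pages, homepage="/"):
    """Return (broken link targets, orphaned pages) for a site."""
    linked_to = {target for targets in links.values() for target in targets}
    broken = sorted(linked_to - known_pages)
    # The homepage is the entry point, so it is never counted as orphaned
    orphaned = sorted(known_pages - linked_to - {homepage})
    return broken, orphaned


# Hypothetical site data
known_pages = {"/", "/blog/", "/pricing/", "/old-landing/"}
links = {
    "/": ["/blog/", "/pricing/"],
    "/blog/": ["/pricing/", "/missing/"],
}

broken, orphaned = internal_link_report(links, known_pages)
print("broken:", broken)
print("orphaned:", orphaned)
```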

The report also includes a graph showing the distribution of pages based on their Internal LinkRank (ILR), indicating the strength of internal linking for each page.

This metric helps identify pages that could benefit from more internal links and those that can be used to distribute link equity across the website.

Lastly, following internal linking best practices is essential for future maintenance, such as making internal linking a part of content creation, linking to relevant pages with relevant anchor text, and avoiding excessive internal linking.

Duplicate Content Issues

Duplicate content refers to identical or slightly rewritten content that appears across multiple pages within the same website.

Having duplicate content can lead to several problems, such as the wrong version of a webpage appearing in the search results, and indexing issues.

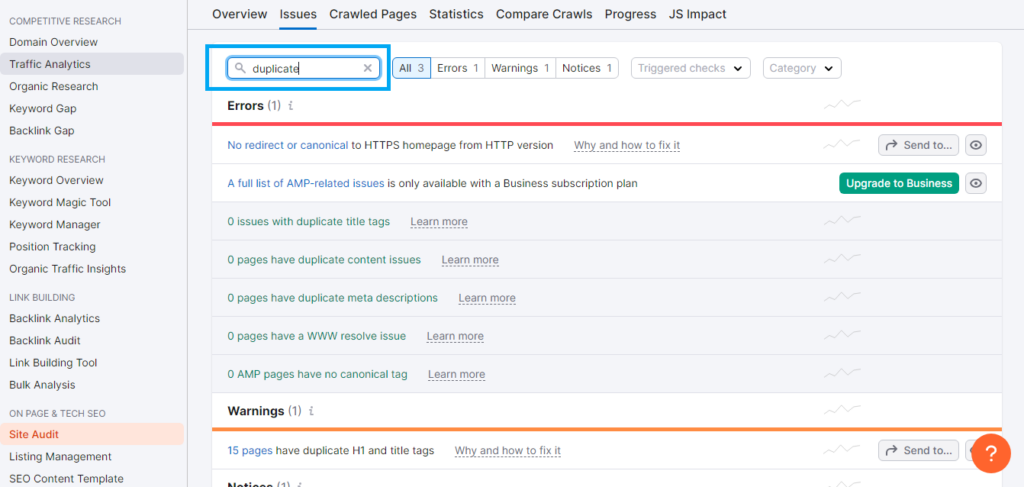

From the site audit overview report, you can find duplicate content by navigating to the “Issues” tab and searching for “duplicate.”

The Site Audit tool treats pages as duplicate content when they are at least 85% identical. This often occurs when multiple versions of the same URL exist or when pages differ only in their URL parameters.
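To get a feel for what an 85% threshold means, here is a rough Python sketch that compares two texts word by word with the standard `difflib` module. This is a stand-in for illustration only. How Semrush actually measures similarity is not described here, and the sample texts are made up.

```python
from difflib import SequenceMatcher

DUPLICATE_THRESHOLD = 0.85  # 85%, as mentioned above


def similarity(text_a, text_b):
    """Return a 0..1 similarity ratio based on matching word sequences."""
    words_a = text_a.lower().split()
    words_b = text_b.lower().split()
    return SequenceMatcher(None, words_a, words_b).ratio()


def is_duplicate(text_a, text_b, threshold=DUPLICATE_THRESHOLD):
    """True when the two texts are at least `threshold` similar."""
    return similarity(text_a, text_b) >= threshold


# Hypothetical page texts
page_a = "Lightweight trail running shoes with a durable rubber sole"
page_b = "How to bake sourdough bread at home"

print(is_duplicate(page_a, page_a))
print(is_duplicate(page_a, page_b))
```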

Multiple Versions of URLs

Duplicate content issues can arise if a website has multiple versions of the URL, such as HTTP, HTTPS, www, and non-www.

Google recognizes these as different versions of the site, leading to duplicate content problems. To address this, it’s essential to choose a preferred version of the site and implement a sitewide 301 redirect, which ensures that only one version of the pages is accessible.
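The redirect itself is configured on your server or CDN, but the mapping it has to perform can be sketched in a few lines of Python. The preferred scheme and host below (`https` and `www.example.com`) are hypothetical choices.

```python
from urllib.parse import urlsplit, urlunsplit

PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.example.com"  # hypothetical preferred version
HOST_VARIANTS = {"example.com", "www.example.com"}


def redirect_target(url):
    """Return the URL to 301-redirect to, or None if no redirect is needed."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host not in HOST_VARIANTS:
        return None  # not one of this site's hosts
    if parts.scheme == PREFERRED_SCHEME and host == PREFERRED_HOST:
        return None  # already the preferred version
    return urlunsplit(
        (PREFERRED_SCHEME, PREFERRED_HOST, parts.path or "/", parts.query, parts.fragment)
    )


print(redirect_target("http://example.com/blog/?page=2"))
print(redirect_target("https://www.example.com/blog/"))
```

All four variants of a page end up at a single address, which is what the sitewide 301 redirect should achieve.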

URL Parameters

URL parameters are additional elements appended to a URL to sort and organize website content. They often cause duplicate content issues because the resulting pages are nearly identical to the same URLs without parameters.

Google typically selects the best version for search results but recommends actions to reduce potential problems, such as reducing unnecessary parameters and using canonical tags.

When setting up an SEO audit, it’s recommended to avoid crawling pages with URL parameters. This ensures that only the desired pages are analyzed, excluding their parameter versions.
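To see which of your URLs are just parameter versions of the same page, you can group them by their parameter-free form. Here is a minimal Python sketch with made-up URLs.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit


def without_parameters(url):
    """Return the URL with its query string and fragment removed."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def group_parameter_variants(urls):
    """Map each base URL to its variants, keeping only bases with 2+ URLs."""
    groups = defaultdict(list)
    for url in urls:
        groups[without_parameters(url)].append(url)
    return {base: found for base, found in groups.items() if len(found) > 1}


# Hypothetical crawled URLs
crawled = [
    "https://example.com/shoes/",
    "https://example.com/shoes/?sort=price",
    "https://example.com/shoes/?color=red&sort=price",
    "https://example.com/about/",
]

variants = group_parameter_variants(crawled)
for base, found in variants.items():
    print(base, "has", len(found), "versions")
```

The base URL of each group is a natural candidate for the canonical tag on its parameter versions.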

HTTPS Issues

HTTPS stands for Hypertext Transfer Protocol Secure. The “S” indicates that your website has a security certificate. Having a valid certificate is crucial as it protects the data exchanged with your site from third-party attackers.

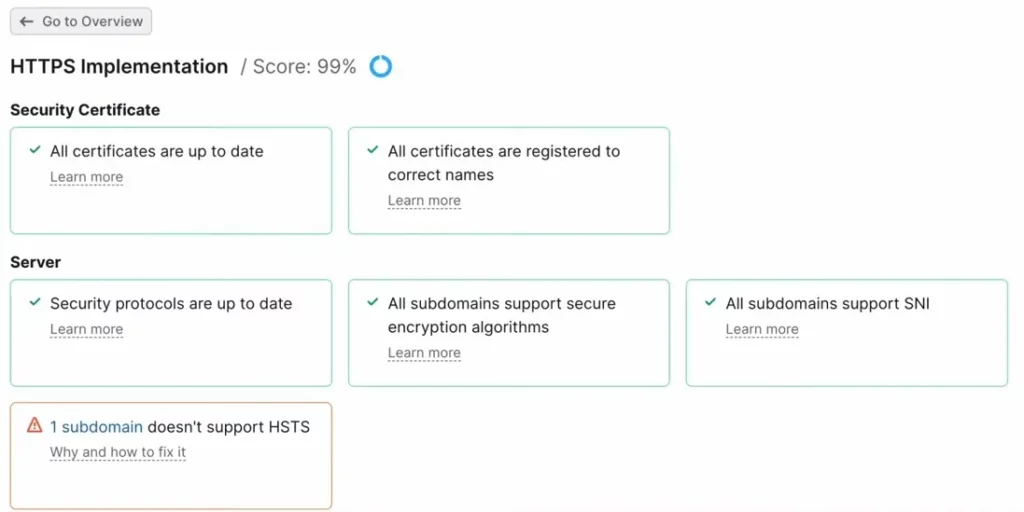

The HTTPS Implementation report in the Site Audit tool identifies potential security weaknesses related to security certificates, server alignment with Google’s security best practices, and the protection of website pages and elements with HTTPS.

It provides recommendations for resolving issues and emphasizes the importance of migrating from HTTP to HTTPS for building a trustworthy brand. The report’s insights and recommendations can help improve overall site health and security.
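One common problem after a migration is an HTTPS page that still loads images or scripts over plain HTTP. Here is a minimal Python sketch that finds such elements in a page’s HTML using the standard `html.parser` module. The sample HTML and the list of tags to check are assumptions for the example.

```python
from html.parser import HTMLParser


class InsecureResourceFinder(HTMLParser):
    """Collect resources that are still requested over plain HTTP."""

    # (tag, attribute) pairs that load a resource into the page
    RESOURCE_ATTRS = {
        ("img", "src"),
        ("script", "src"),
        ("iframe", "src"),
        ("link", "href"),
    }

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if (tag, name) in self.RESOURCE_ATTRS and value and value.startswith("http://"):
                self.insecure.append(value)


def find_insecure_resources(html):
    finder = InsecureResourceFinder()
    finder.feed(html)
    return finder.insecure


# Hypothetical page markup
SAMPLE_HTML = (
    '<img src="http://example.com/logo.png">'
    '<script src="https://example.com/app.js"></script>'
    '<a href="http://example.com/">Home</a>'
)

print(find_insecure_resources(SAMPLE_HTML))
```

Ordinary `<a>` links are skipped on purpose, since they do not load anything into the page itself.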

How to use the Site Audit Tool to check for HTTPS issues?

The first step in migrating your website from HTTP to HTTPS is choosing a TLS certificate. Many options are available, both paid and free. The transfer may take some time, and it’s normal for some issues to arise during the redirection process.

Utilizing plugins, such as those for WordPress, can help with redirecting links. After completing the migration steps, it’s essential to run the website through the Site Audit tool to identify any technical issues.

The audit tool will provide a prioritized list of issues to address, and progress can be tracked through subsequent audits. It’s crucial to address the most severe issues first and re-run the audit to monitor the improvements.

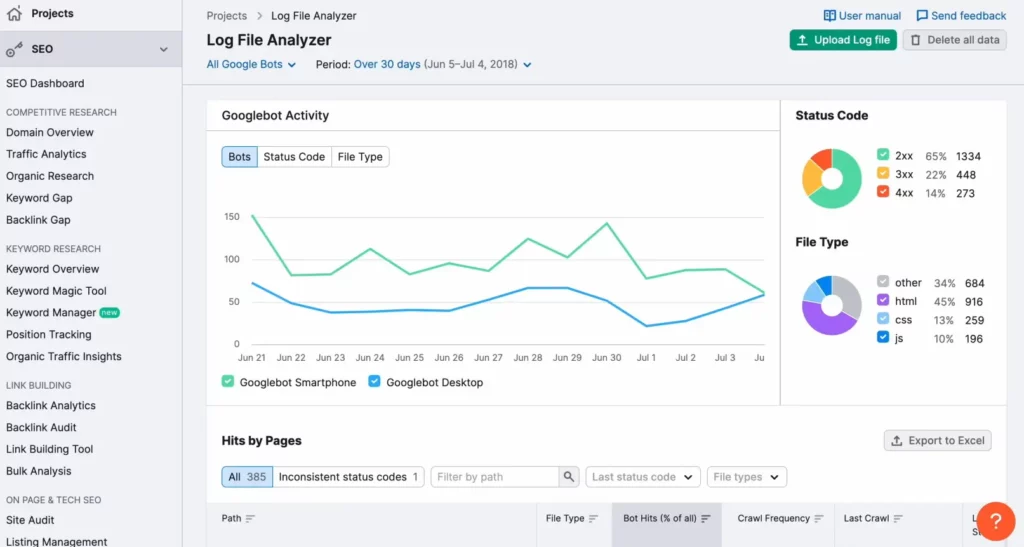

Log File Analysis

Log files keep a record of all activities on a website by both users and bots.

Analyzing these files provides valuable insights into how a website is performing. For instance, it helps identify frequently crawled pages, file types, bot activity, and status codes.

This analysis helps to identify opportunities for improvement, manage the crawl budget, and fix structural and navigational issues that may hinder page accessibility.

To understand how Googlebot interacts with your website, you can analyze your server’s log file. To do that, check the /logs/ or /access_log/ folder on your server and download the file. Then, upload it into the Log File Analyzer.
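If you are curious what such a file contains, here is a minimal Python sketch that counts Googlebot requests per page and status code. It assumes the common “combined” access log format used by many Apache and Nginx setups, and the sample lines are made up. Your server’s format may differ.

```python
import re
from collections import Counter

# Combined log format: ip, identity, user, [time], "request", status, size, "referer", "user agent"
LOG_PATTERN = re.compile(
    r'^(\S+) \S+ \S+ \[([^\]]+)\] "(\S+) (\S+) [^"]*" (\d{3}) \S+ "[^"]*" "([^"]*)"$'
)


def googlebot_hits(lines):
    """Count (path, status) pairs for requests whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        if not match:
            continue  # skip lines in another format
        _ip, _time, _method, path, status, agent = match.groups()
        # The user agent can be spoofed, so this is only a first approximation
        if "Googlebot" in agent:
            hits[(path, status)] += 1
    return hits


# Hypothetical log lines
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Jan/2024:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Jan/2024:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 312 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Jan/2024:10:00:09 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

hits = googlebot_hits(SAMPLE_LOG)
for (path, status), count in hits.items():
    print(path, status, count)
```

Pages that return 404 to Googlebot, like `/old-page` here, are the kind of wasted crawl activity a log analysis helps you find.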

Final Thoughts

Conducting a technical SEO audit stands as a critical practice for enhancing online visibility. Using a great tool like Semrush simplifies this process!

So, if you haven’t got a Semrush account yet, sign up for a free trial and give it a try. It’s worth trying out!